In recent years, deepfake technology has advanced rapidly, both enabling innovative applications and raising significant ethical concerns. Among the most disturbing uses of this technology is the creation of non-consensual pornographic content, where images of unsuspecting individuals are manipulated to produce explicit material.

This misuse of deepfakes represents a severe invasion of privacy and a clear violation of personal rights, often targeting women and leading to psychological and social harm.

Overview of the Incident

A disturbing exploitation of deepfake technology was recently uncovered by the deepfake-monitoring firm Sensity. They reported a significant operation on the encrypted messaging app Telegram, where a bot was used to generate fake nude images of women.

Users would submit a photograph of a woman to the bot, which then used deepfake technology to generate a fake nude body and superimpose it onto the original photo. The resulting images were watermarked, and users were prompted to pay for ‘clean’ versions, which gave this invasive service its profit motive.

The Magnitude of the Problem

The scale of this issue is alarming. Sensity’s investigation revealed that over 680,000 women had been targeted by this bot, with their manipulated images circulating among approximately 101,000 members across several Telegram channels.

About 70% of the bot’s users were from Russia and other former USSR countries, though members from the US, Europe, and Latin America were also involved.

This widespread involvement underscores the global reach and significant impact of deepfake technology when misused for creating non-consensual pornographic content.

Implications for Victims

The manipulation of personal images through deepfake technology has profound and disturbing implications for the victims. Individuals whose images are non-consensually transformed suffer from a broad spectrum of negative outcomes. Psychologically, victims often experience feelings of violation, anxiety, and a significant loss of trust in digital platforms.

Socially, the repercussions can be equally severe, leading to ostracism, harassment, and a damaging impact on both personal and professional relationships. Dr. Ksenia Bakina from Privacy International highlights that the harms are not just virtual but manifest in real-world consequences, including threats and continued abuse, underscoring the urgent need for effective responses to this misuse of technology.

Technological Background

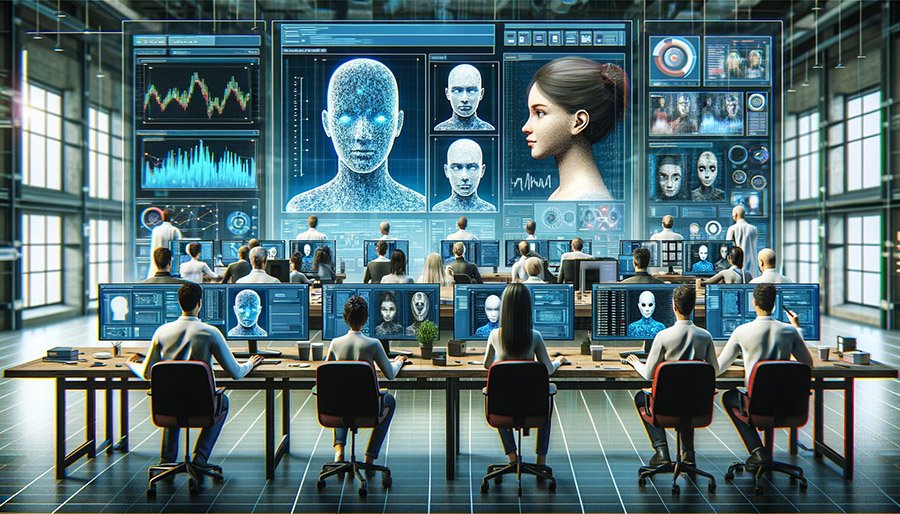

Deepfake technology often relies on Generative Adversarial Networks (GANs), a class of artificial intelligence algorithms used in unsupervised machine learning. They operate by pitting two neural networks against each other: one (the generator) produces images, while the other (the discriminator) judges whether each image looks real or generated. By continuously improving through this internal competition, GANs can produce highly realistic images and videos.
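The competition between the two networks is usually written as a minimax objective. The following is a sketch of the standard GAN formulation, using conventional notation that does not come from the Sensity report or from any specific tool: $G$ is the network that generates images, $D$ is the network that evaluates them, $x$ is a real image drawn from the training data, and $z$ is random noise fed to $G$.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

Here $D(\cdot)$ is the probability the evaluating network assigns to an image being real. $D$ is trained to make this expression large by scoring real images near 1 and generated images near 0, while $G$ is trained to make it small by producing images that $D$ scores near 1. Each network's progress forces the other to improve, which is why the outputs become more realistic over time.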

In the case of the Telegram bot, the technology used was based on an open-source version of software known as “DeepNude,” which first appeared in 2019 and was designed specifically to create nude images from clothed photos. Despite attempts to shut down the original software, its code proliferated across the internet, leading to widespread misuse as seen in this incident.

Economic and Motivational Factors

The prevalent use of deepfake technology for creating pornographic content can largely be attributed to its potential for monetization. Operators of such services often offer free access to generated content with watermarks, while charging users for clean versions, as seen with the Telegram bot. This economic model incentivizes the continuous production and refinement of deepfake content.

Furthermore, polls conducted within the Telegram community reveal a disturbing trend in user motivations: a significant majority expressed a desire to see manipulated nude images of women they personally know, while others sought images of celebrities or social media personalities. This indicates not only a pursuit of personal gratification but also an intent to harm and exert control over individuals’ representations and identities.

Legal and Social Challenges

The emergence of deepfakes highlights significant legal grey areas, particularly around consent and image rights. Current laws often do not specifically address the nuances of deepfake technology, making it challenging to prosecute offenders effectively.

According to Dr. Ksenia Bakina from Privacy International, although some existing laws might cover aspects of deepfake abuse, they fall short in addressing the specific issues of consent and the non-physical nature of the harm caused.

Societal attitudes also play a critical role in the proliferation of this technology. The normalization of online harassment and the objectification of individuals, especially women, contribute to an environment where such abuses can thrive.

Global Response and Solutions

In response to the growing threat posed by deepfakes, several initiatives and recommendations have been put forward to combat their misuse. Digital rights groups advocate for stronger regulations that specifically address deepfake technology, calling for laws that not only punish offenders but also protect victims from psychological and social harm.

Additionally, technology companies are being urged to develop more robust detection tools to identify and block deepfake content proactively. Internationally, some countries have begun to implement specific legislation that makes the creation and distribution of non-consensual deepfake content a criminal offense, setting a legal precedent that others could follow.

Conclusion

The issue of deepfake technology and its misuse underscores a pressing concern in the digital age. As the technology becomes more accessible and its applications more sophisticated, the potential for harm increases. The primary concerns revolve around non-consensual use, privacy violations, and the ease with which individuals can be targeted and humiliated.

Addressing these challenges requires a concerted effort encompassing awareness campaigns, technological advancements in detection, and stringent legal frameworks. Only through a multi-faceted approach can we hope to protect individuals from the profound impacts of this emerging technology and preserve digital ethics in an increasingly complex online world.
